A shift is taking place in how quantum computing results are judged, moving from speed alone to whether outcomes can be independently verified. Researchers at Google Quantum AI report a breakthrough that focuses on calculations classical supercomputers cannot easily simulate or check, especially in areas linked to chemistry and medicine.

The team targeted problems where classical simulation becomes costly very quickly, even before errors are added. Their aim was to produce results that are stable and repeatable, rather than one-off demonstrations. Hartmut Neven, founder and lead of Google Quantum AI, has prioritized this goal because earlier claims of quantum advantage were later matched by improved classical methods.

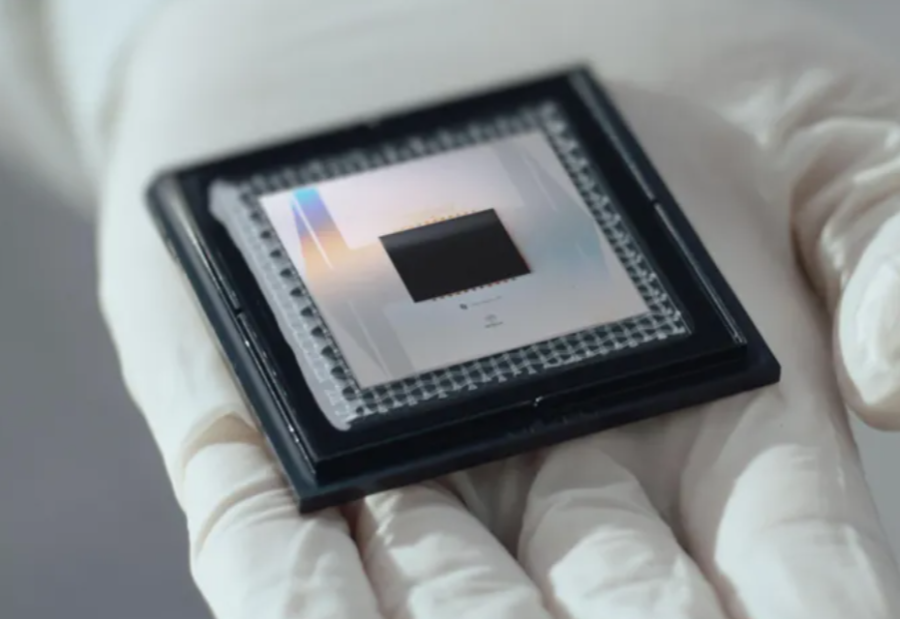

“Our Willow quantum chip demonstrates the first-ever algorithm to achieve verifiable quantum advantage on hardware,” said Neven.

The approach uses a method called Quantum Echoes. The system runs operations forward, flips selected qubits, and then runs the same operations backward. This controlled rewind creates an interferometer inside the chip, where quantum signals can reinforce or cancel each other. Without this rewind, useful phase information would disappear.
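To make the forward, flip, rewind idea concrete, here is a minimal NumPy sketch on a handful of simulated qubits. It is not Google's Quantum Echoes code: a Haar-random unitary stands in for the chip's circuit, an X gate on one qubit stands in for the flip, and the helper names (`embed`, `random_unitary`, `echo_expectation`) are hypothetical. With no scrambling, the flip stays exactly where it was applied; once the circuit scrambles, a probe on a different qubit picks up the disturbance.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)


def embed(op: np.ndarray, qubit: int, n: int) -> np.ndarray:
    """Place a single-qubit operator on `qubit` of an n-qubit register (qubit 0 is leftmost)."""
    out = np.array([[1.0 + 0j]])
    for k in range(n):
        out = np.kron(out, op if k == qubit else np.eye(2))
    return out


def random_unitary(dim: int, rng: np.random.Generator) -> np.ndarray:
    """Haar-random unitary from the QR decomposition of a complex Gaussian matrix."""
    z = (rng.standard_normal((dim, dim)) + 1j * rng.standard_normal((dim, dim))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    d = np.diag(r)
    return q * (d / np.abs(d))  # fix column phases so the result is Haar-distributed


def echo_expectation(U: np.ndarray, n: int, flip_qubit: int, probe_qubit: int) -> float:
    """Run U forward, flip one qubit with X, rewind with U^dagger, then return <Z> on the probe."""
    psi = np.zeros(2**n, dtype=complex)
    psi[0] = 1.0  # start in |00...0>
    flip = embed(X, flip_qubit, n)
    echoed = U.conj().T @ (flip @ (U @ psi))  # forward, perturb, rewind
    probe = embed(Z, probe_qubit, n)
    return float(np.real(np.vdot(echoed, probe @ echoed)))


n = 4
identity = np.eye(2**n)
print(echo_expectation(identity, n, flip_qubit=0, probe_qubit=0))  # -1.0: the flip stays put
print(echo_expectation(identity, n, flip_qubit=0, probe_qubit=1))  # 1.0: neighbour untouched
scrambler = random_unitary(2**n, np.random.default_rng(7))
print(echo_expectation(scrambler, n, flip_qubit=0, probe_qubit=1))  # seed-dependent
```

The brute-force state vector here grows as 2^n, which is exactly why this kind of simulation stops being practical at the qubit counts the article describes.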

Researchers measured an out-of-time-order correlator, or OTOC, which tracks how disturbances spread over time. By running the echo sequence twice, the second-order correlator, OTOC(2), kept useful signals alive even after ordinary correlations had faded. This showed the chip could still detect small differences between circuit runs, though noise will eventually limit the method.
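An OTOC can be written as a trace over the echoed operator B(t) = U†BU and a measured operator M. The sketch below assumes the common infinite-temperature form Tr[B(t) M B(t) M] / 2^n for first order and, as an illustrative assumption, Tr[(B(t) M)^4] / 2^n for second order. The experiment's exact definitions and normalization may differ, the random unitary is only a stand-in for the real circuit, and the function names are hypothetical.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)


def embed(op, qubit, n):
    """Place a single-qubit operator on `qubit` of an n-qubit register (qubit 0 is leftmost)."""
    out = np.array([[1.0 + 0j]])
    for k in range(n):
        out = np.kron(out, op if k == qubit else np.eye(2))
    return out


def random_unitary(dim, rng):
    """Haar-random unitary from the QR decomposition of a complex Gaussian matrix."""
    z = (rng.standard_normal((dim, dim)) + 1j * rng.standard_normal((dim, dim))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    d = np.diag(r)
    return q * (d / np.abs(d))


def otoc(U, n, order=1, butterfly_qubit=0, measure_qubit=None):
    """Infinite-temperature OTOC of a given order: Tr[(B(t) M)^(2*order)] / 2^n.

    B(t) = U^dagger B U is the butterfly operator after a forward-and-backward echo.
    order=1 is the standard OTOC; order=2 is the assumed second-order analogue.
    """
    if measure_qubit is None:
        measure_qubit = n - 1
    B = embed(X, butterfly_qubit, n)
    M = embed(Z, measure_qubit, n)
    Bt = U.conj().T @ B @ U
    loop = np.linalg.matrix_power(Bt @ M, 2 * order)
    return float(np.real(np.trace(loop))) / 2**n


n = 4
print(otoc(np.eye(2**n), n, order=1), otoc(np.eye(2**n), n, order=2))  # 1.0 1.0 (no scrambling)
U = random_unitary(2**n, np.random.default_rng(3))
print(otoc(U, n, order=1), otoc(U, n, order=2))  # seed-dependent values
```

When B(t) and M act on separate qubits they commute and both orders equal 1; scrambling breaks that commutation and pulls the values toward zero.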

Inside the processor, the calculation spread into Pauli strings, which describe how local changes move across many qubits. Random phases flipped the signs of many of these strings, which exposed constructive interference wherever large loops of strings aligned. However, small hardware errors can blur these effects and restrict scaling.
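For a few qubits, the Pauli-string picture can be computed directly. In this hypothetical sketch, the echoed operator U†BU is expanded over all 4^n strings; the squared coefficients sum to 1, and `mean_support` (an illustrative name, not a standard API) reports how many qubits a typical string touches. A local X on qubit 0 stays a single string under the identity circuit and spreads across many strings under a random one, which here stands in for the chip's circuit.

```python
import itertools

import numpy as np

PAULIS = {
    "I": np.eye(2, dtype=complex),
    "X": np.array([[0, 1], [1, 0]], dtype=complex),
    "Y": np.array([[0, -1j], [1j, 0]], dtype=complex),
    "Z": np.array([[1, 0], [0, -1]], dtype=complex),
}


def pauli_matrix(label):
    """Tensor product for a Pauli-string label such as 'XIZ' (leftmost character = qubit 0)."""
    out = np.array([[1.0 + 0j]])
    for ch in label:
        out = np.kron(out, PAULIS[ch])
    return out


def pauli_weights(op, n):
    """Expand an n-qubit operator in Pauli strings; return {label: |coefficient|^2}."""
    weights = {}
    for chars in itertools.product("IXYZ", repeat=n):
        label = "".join(chars)
        coeff = np.trace(pauli_matrix(label) @ op) / 2**n  # Pauli strings are Hermitian
        if abs(coeff) > 1e-12:
            weights[label] = float(abs(coeff) ** 2)
    return weights


def mean_support(weights):
    """Average number of qubits a string acts on non-trivially: a simple 'spread' measure."""
    return sum(w * sum(ch != "I" for ch in label) for label, w in weights.items())


def random_unitary(dim, rng):
    """Haar-random unitary from the QR decomposition of a complex Gaussian matrix."""
    z = (rng.standard_normal((dim, dim)) + 1j * rng.standard_normal((dim, dim))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    d = np.diag(r)
    return q * (d / np.abs(d))


n = 3
B = pauli_matrix("XII")  # local perturbation on qubit 0
print(pauli_weights(B, n))  # {'XII': 1.0}

U = random_unitary(2**n, np.random.default_rng(5))
spread = pauli_weights(U.conj().T @ B @ U, n)
print(len(spread), round(sum(spread.values()), 6), round(mean_support(spread), 2))
```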

The main challenge was predicting the result on a classical machine. One study estimated that simulating one data point with 65 qubits would take about 3.2 years on the Frontier supercomputer, while the same task took about 2.1 hours on Google’s quantum hardware. Frontier can perform over a quintillion calculations per second, yet the cost of tracking this quantum interference still overwhelms it.
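The reported figures imply the size of the gap directly. This back-of-the-envelope check assumes a 365.25-day year, which the source does not specify, and treats one quintillion (10^18) operations per second as Frontier's sustained rate, which is a simplification.

```python
HOURS_PER_YEAR = 365.25 * 24  # assumption: a Julian year; the source does not specify

frontier_hours = 3.2 * HOURS_PER_YEAR  # reported classical estimate for one 65-qubit data point
quantum_hours = 2.1                    # reported run time on Google's quantum hardware

speedup = frontier_hours / quantum_hours

# Rough scale only: assumes Frontier sustains 1e18 operations per second for the whole run.
frontier_ops_estimate = 1e18 * frontier_hours * 3600

print(f"speedup ≈ {speedup:,.0f}x")  # speedup ≈ 13,358x
print(f"Frontier work ≈ {frontier_ops_estimate:.1e} operations")
```

In other words, the quoted times correspond to a roughly 13,000-fold difference for a single data point.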

The experiment relied on superconducting qubits that stayed coherent long enough to run and reverse long circuits. Errors in each gate reduce the accuracy of the rewind, making stability essential.
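Why per-gate errors matter so much for an echo can be seen with a crude model: if each gate independently succeeds with probability 1 - ε, running a circuit forward and then backward applies that factor to twice as many gates. The error rates and the 500-gate depth below are hypothetical, chosen only to show the trend; real hardware noise is not this simple.

```python
def echo_fidelity_estimate(gate_error: float, gates_per_direction: int) -> float:
    """Crude independent-error estimate of how much echo signal survives.

    Assumes each gate succeeds with probability (1 - gate_error) and that the echo
    runs the circuit forward and then backward, doubling the number of gates.
    """
    if not 0.0 <= gate_error < 1.0:
        raise ValueError("gate_error must be in [0, 1)")
    if gates_per_direction < 0:
        raise ValueError("gates_per_direction must be non-negative")
    return (1.0 - gate_error) ** (2 * gates_per_direction)


# Hypothetical numbers, chosen only to show the trend.
for eps in (0.001, 0.003, 0.01):
    surviving = echo_fidelity_estimate(eps, 500)
    print(f"gate error {eps:.1%}: {surviving:.3g} of the signal survives")
```

Because the survival factor is exponential in gate count, small improvements in per-gate error translate into much deeper usable echoes.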

Beyond benchmarks, the method can help study real quantum systems through Hamiltonian learning and could improve molecular structure analysis.
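Hamiltonian learning turns the simulation around: measure an echo signal, then search for the model parameters that reproduce it. The toy sketch below uses a single qubit with a hypothetical Hamiltonian H = (ω/2)X, for which the OTOC with B = M = Z works out to cos(2ωt), and recovers ω from simulated noisy data by grid search. It illustrates the idea only; the model, the parameter values and the function names are assumptions, not the procedure used in the experiment.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)


def evolve(H, t):
    """U = exp(-i H t) for a Hermitian H, via its eigendecomposition."""
    evals, evecs = np.linalg.eigh(H)
    return evecs @ np.diag(np.exp(-1j * evals * t)) @ evecs.conj().T


def otoc_curve(omega, times):
    """Toy model: H = (omega / 2) X on one qubit, with B = M = Z.

    Returns Tr[B(t) M B(t) M] / 2 at each time; analytically this equals cos(2 * omega * t).
    """
    H = 0.5 * omega * X
    values = []
    for t in times:
        U = evolve(H, t)
        Bt = U.conj().T @ Z @ U  # echo: forward, apply Z, rewind
        values.append(np.real(np.trace(Bt @ Z @ Bt @ Z)) / 2)
    return np.array(values)


def learn_omega(measured, times, grid):
    """Grid search: pick the omega whose simulated echo curve best matches the data."""
    losses = [np.sum((otoc_curve(w, times) - measured) ** 2) for w in grid]
    return float(grid[int(np.argmin(losses))])


times = np.linspace(0.1, 1.0, 10)
true_omega = 0.7  # hypothetical "unknown" parameter
rng = np.random.default_rng(1)
measured = otoc_curve(true_omega, times) + 0.01 * rng.standard_normal(times.size)
grid = np.linspace(0.0, 2.0, 201)
print(learn_omega(measured, times, grid))  # close to 0.7
```

A grid search keeps the sketch transparent; with more parameters, a gradient-based or Bayesian fit would be the more practical choice.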

“This demonstration of the first-ever verifiable quantum advantage with our Quantum Echoes algorithm marks a significant step toward the first real-world applications of quantum computing,” said Neven.

The next step is repeating the results on other machines and reducing errors further to support useful chemistry calculations.


About us:

The Mainstream is a premier platform delivering the latest updates and informed perspectives across the technology business and cyber landscape. Built on research-driven, thought leadership and original intellectual property, The Mainstream also curates summits & conferences that convene decision makers to explore how technology reshapes industries and leadership. With a growing presence in India and globally across the Middle East, Africa, ASEAN, the USA, the UK and Australia, The Mainstream carries a vision to bring the latest happenings and insights to 8.2 billion people and to place technology at the centre of conversation for leaders navigating the future.